Navigating the 2025 landscape of no code retrieval augmented generation to create functional business agents.

- INTRODUCTION

- CONTEXT AND BACKGROUND

- WHAT MOST ARTICLES MISS

- CORE ANALYSIS

- Stage One: Selecting the No Code Stack

- Stage Two: The Data Engineering Process

- Stage Three: Crafting the System Prompt

- Stage Four: Simulation and Testing

- PRACTICAL IMPLICATIONS

- LIMITATIONS, RISKS, OR COUNTERPOINTS

- FORWARD LOOKING PERSPECTIVE

- KEY TAKEAWAYS

- EDITORIAL CONCLUSION

- REFERENCES AND SOURCES

INTRODUCTION

In the current landscape of 2025, the ability to construct a specialized artificial intelligence assistant has transitioned from a high level engineering feat to a standard business competency. Most contemporary enterprises face a significant challenge: their internal knowledge is trapped in static documents, scattered spreadsheets, and fragmented emails. Traditional methods of surfacing this information through keyword search are no longer sufficient for the rapid pace of digital operations. The “AI Chatbot” has evolved from a simple scripted responder into a sophisticated agent capable of retrieving, reasoning, and acting upon proprietary data.

This guide provides a technical roadmap for professionals who seek to bridge the gap between static information and interactive intelligence. We address the common frustration of “generic” AI that lacks specific organizational context. By focusing on the Retrieval Augmented Generation framework, this article promises a functional path to building a bot that actually understands your specific domain. You will move beyond the superficial “plug and play” tutorials and gain a deep understanding of how data ingestion, semantic search, and prompt architecture work together. The ultimate goal is to empower you to deploy an assistant that does not just talk but delivers measurable value through accuracy and efficiency. By the end of this read, the mystery of custom AI training will be replaced by a structured, manageable workflow.

CONTEXT AND BACKGROUND

The journey of the conversational interface began in the 1960s with ELIZA, a primitive system that used simple pattern matching to mimic human therapy. For decades, the industry was restricted to “Rule Based” systems. These bots operated on rigid decision trees; if a user deviated from the script, the bot failed. This created a legacy of user frustration where “chatbots” were synonymous with unhelpful automated phone menus.

The radical shift occurred with the arrival of Large Language Models. We have moved from a “Search” paradigm to a “Synthesize” paradigm. In 2025, a chatbot is no longer just a list of Frequently Asked Questions. It is a “Cognitive Layer” that sits on top of your data. Consider the analogy of a modern digital librarian. In the old days, a librarian could only point you to the correct shelf. Today, the AI librarian reads every book in the building and provides you with a cohesive, summarized answer based on the specific page and paragraph you need.

This evolution is driven by the maturation of Retrieval Augmented Generation. Instead of “retraining” a massive model, which is expensive and slow, we now give the model a temporary “short term memory” of specific documents. This allows for near instant updates and high accuracy without the need for a dedicated data science team. Historically, building such a system would require a fleet of backend developers and months of fine tuning. Now, through the proliferation of “No Code” platforms, the barrier to entry has collapsed. We are witnessing the democratization of specialized intelligence, where a small business owner can deploy a tool with the same underlying power as a global corporation. This context is vital because it explains why “custom” is the new standard. A bot that knows everything about the world but nothing about your specific pricing or policies is a liability, not an asset.
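
The "short term memory" idea can be sketched in a few lines: find the passage closest to the question, then place it in the prompt. This is a minimal illustration, not how any particular platform works. The three-number vectors are hand-written stand-ins for what an embedding model would produce, and the knowledge base entries, `retrieve`, and `build_prompt` are hypothetical names and sample data.

```python
import math

# Toy 3-dimensional "embeddings", hand-written for illustration only.
# A real platform would compute these with an embedding model.
KNOWLEDGE_BASE = [
    {"text": "Refunds are issued within 14 days of a return.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Standard shipping takes 3 to 5 business days.", "vector": [0.1, 0.9, 0.1]},
    {"text": "Support is available on weekdays from 9 to 5.", "vector": [0.0, 0.2, 0.9]},
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 means more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector, top_k=1):
    """Return the top_k passages whose vectors are closest to the query vector."""
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda doc: cosine_similarity(query_vector, doc["vector"]),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p['text']}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Pretend embedding of the question "How long does delivery take?"
query_vector = [0.2, 0.95, 0.05]
prompt = build_prompt("How long does delivery take?", retrieve(query_vector))
print(prompt)
```

Updating the bot then means editing `KNOWLEDGE_BASE`, not retraining the model, which is why updates can be near instant.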

WHAT MOST ARTICLES MISS

Most instructional articles regarding chatbot development fall into the trap of oversimplification, suggesting that you can simply “upload a PDF and go.” This narrative ignores the underlying mechanics of “Semantic Chunking” and “Vector Embeddings,” which are the true engines of a successful bot. One of the most common assumptions is that “more data equals better performance.” In reality, feeding a model unorganized or contradictory data often results in “hallucinations” where the bot merges two different policies into a single, incorrect answer.

Another frequently missed detail is the “Latency versus Logic” tradeoff. Beginners often select the most powerful model available (like GPT-5) for every task. The overlooked reality is that for a simple Frequently Asked Questions bot, using a high reasoning model is a waste of resources that can leave the user waiting several seconds for each reply. A faster, smaller model is often superior for standard retrieval.

Furthermore, many guides fail to mention the critical importance of “Metadata.” When an AI retrieves an answer, the user needs to know where that answer came from. A bot that provides a confident answer without a source citation is a trust risk. Originality in 2025 development lies in “Source Attribution” and “Reranking.” This involves a secondary process where the bot looks at the top five pieces of information it found and double checks them for relevance before answering.
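
A rough sketch of the rerank and cite step follows. Production rerankers typically use a dedicated scoring model; here a simple word overlap score stands in for it, so treat the scoring as a placeholder. The candidate passages, source names, and the `keep` and `min_score` thresholds are invented for illustration.

```python
def overlap_score(question, passage):
    """Fraction of question words that also appear in the passage.

    A crude stand-in for a real reranking model.
    """
    q_terms = set(question.lower().split())
    p_terms = set(passage.lower().split())
    return len(q_terms & p_terms) / len(q_terms) if q_terms else 0.0


def rerank(question, candidates, keep=2, min_score=0.3):
    """Re-score first-pass candidates, drop weak ones, keep the best few."""
    scored = [(overlap_score(question, c["text"]), c) for c in candidates]
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:keep]]


def cite(passages):
    """Build a source line from the metadata of the passages actually used."""
    return "Sources: " + "; ".join(sorted({p["source"] for p in passages}))


# Hypothetical top five passages returned by a first-pass search.
candidates = [
    {"text": "refund requests are accepted within 30 days", "source": "Refund Policy"},
    {"text": "our office is closed on public holidays", "source": "Office Hours"},
    {"text": "a refund is issued to the original payment method", "source": "Refund Policy"},
    {"text": "shipping is free for large orders", "source": "Shipping Guide"},
    {"text": "gift cards are not eligible for a refund", "source": "Gift Card Terms"},
]

question = "how is a refund issued"
top = rerank(question, candidates)
print(top[0]["text"])
print(cite(top))
```

Because the citation is built from the metadata of the passages that survived reranking, the bot can only cite documents it actually used.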

Finally, there is the myth of “Permanent Training.” Most people believe the bot “learns” from every user chat in real time. In reality, modern production bots use a “Frozen” knowledge base to ensure consistency and safety. Allowing a bot to learn from live user input without a human filter is a recipe for toxic or biased behavior. Understanding that your bot is a “Knowledge Retrieval Tool” rather than a “Learning Being” is the first step toward a professional deployment. By focusing on these nuanced technical realities, you avoid the “uncanny valley” of AI where the bot sounds smart but remains fundamentally unreliable.

CORE ANALYSIS

Building an AI chatbot in 2025 follows a structured four stage framework: Tool Selection, Data Preparation, Prompt Architecture, and Simulation and Testing.

Stage One: Selecting the No Code Stack

The current market offers specialized tools for different outcomes. Your choice should be dictated by your primary data source and the complexity of your desired logic.

| Category | Recommended Tools | Best For |
| --- | --- | --- |
| Customer Support | YourGPT, Chatbase | High traffic websites and multilingual support |
| Knowledge Base | CustomGPT.ai, Dante AI | Deep document retrieval and accuracy |
| Logic & Flows | Voiceflow, Botpress | Complex branching and human handoffs |
| Internal Tools | Zapier Central, eesel AI | Connecting to Slack, Google Drive, and CRM |

Stage Two: The Data Engineering Process

This is the most critical step. You must transform your raw data into functional context.

- Semantic Chunking: Do not upload a massive manual as a single file. Break it into logical sections of 500 to 1000 words. This ensures the bot retrieves only the relevant portion.

- Metadata Tagging: Store the source, title, and last updated date of every document. This allows the bot to say “According to the 2025 Refund Policy…”

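- Vectorization: The platform will convert your text into “Vectors,” lists of numbers that place passages with similar meaning close together. This enables the bot to recognize that “shipping delay” and “late delivery” refer to closely related concepts even though they share no words.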
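
The chunking and tagging steps above can be sketched as follows. No code platforms do this for you behind the upload button, so this is only meant to show what happens to a document. It assumes paragraphs are separated by blank lines; the `Chunk` class, `chunk_document` function, and the file name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str        # file the chunk came from
    title: str         # human-readable document title
    last_updated: str  # date string used later for citations


def chunk_document(text, source, title, last_updated, max_words=1000):
    """Group whole paragraphs into chunks of roughly max_words words.

    Paragraphs are never split, so a single paragraph longer than
    max_words becomes its own oversized chunk.
    """
    chunks, current, count = [], [], 0
    for paragraph in (p.strip() for p in text.split("\n\n")):
        if not paragraph:
            continue
        words = len(paragraph.split())
        # Start a new chunk when adding this paragraph would exceed the limit.
        if current and count + words > max_words:
            chunks.append(Chunk("\n\n".join(current), source, title, last_updated))
            current, count = [], 0
        current.append(paragraph)
        count += words
    if current:
        chunks.append(Chunk("\n\n".join(current), source, title, last_updated))
    return chunks


# Three synthetic 400-word paragraphs standing in for a long manual.
manual = "\n\n".join(["word " * 400] * 3)
chunks = chunk_document(manual, "refund-policy.pdf", "2025 Refund Policy", "2025-01-15")
for c in chunks:
    print(c.title, c.last_updated, len(c.text.split()), "words")
```

Keeping the metadata on every chunk, not only on the file, is what lets the bot attach a title and date to each answer.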

Stage Three: Crafting the System Prompt

The “System Prompt” is the constitution of your bot. It defines the persona and the boundaries. A professional prompt should follow this structure:

Role: “You are a senior technical support agent for [Company Name].”

Knowledge Boundary: “Only answer questions based on the provided documents. If the answer is not in the text, say ‘I do not have that information.’”

Output Format: “Use bullet points for instructions and always cite the document name at the end.”
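
For readers curious about what the platform sends to the model, the three parts above can be assembled as in the sketch below. The list of `role` and `content` entries follows the message shape used by many chat model APIs, but that shape is an assumption here, and the company name, document, and function names are placeholders.

```python
def build_system_prompt(company_name, output_rules):
    """Combine role, knowledge boundary, and output format into one prompt."""
    role = f"You are a senior technical support agent for {company_name}."
    boundary = (
        "Only answer questions based on the provided documents. "
        "If the answer is not in the text, say 'I do not have that information.'"
    )
    return "\n\n".join(
        [
            f"Role: {role}",
            f"Knowledge Boundary: {boundary}",
            f"Output Format: {output_rules}",
        ]
    )


def build_messages(system_prompt, documents, question):
    """Pair the fixed system prompt with the retrieved documents and the question."""
    context = "\n\n".join(f"[{d['name']}]\n{d['text']}" for d in documents)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Documents:\n{context}\n\nQuestion: {question}"},
    ]


system_prompt = build_system_prompt(
    "Acme Example Co",  # placeholder company name
    "Use bullet points for instructions and always cite the document name at the end.",
)
messages = build_messages(
    system_prompt,
    [{"name": "Setup Guide", "text": "Hold the reset button for ten seconds."}],
    "How do I reset the device?",
)
for m in messages:
    print(m["role"].upper())
    print(m["content"])
    print()
```

Labeling each document with its name in the context is what makes the "cite the document name" rule possible to follow.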

Stage Four: Simulation and Testing

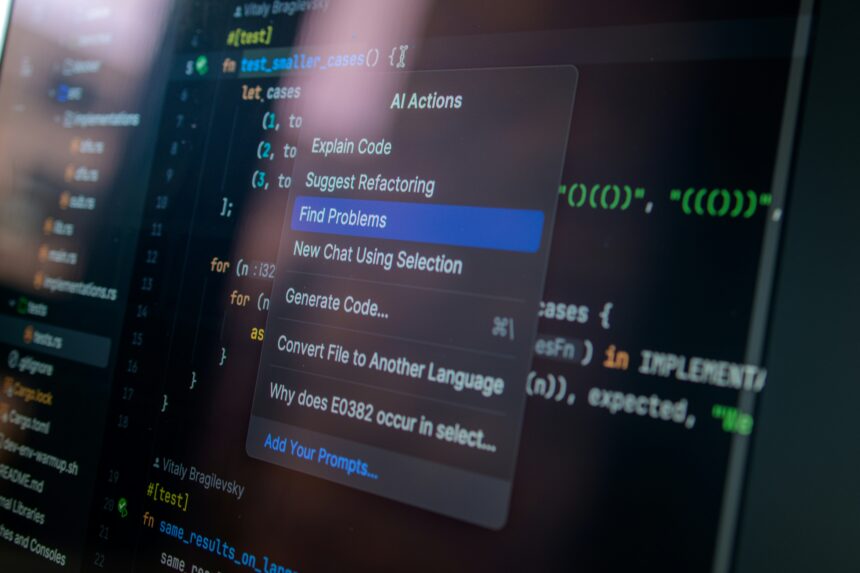

Before going live, use “Simulation Mode.” Test the bot against your past support tickets or common customer queries. You are measuring Precision (of the passages the bot retrieved, how many were actually relevant?) and Recall (of all the relevant passages in the knowledge base, how many did it find?), and you should also confirm that the final answers are factually correct. In 2025, we also use “Red Teaming,” where you try to “break” the bot by asking it to ignore its instructions. Only once it passes these stress tests should you generate the “Embed Code” for your website.
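
As a small worked example of the two retrieval metrics, the sketch below scores one test question. It assumes you have labeled by hand which chunks are relevant to that question; the chunk IDs are made up.

```python
def precision_recall(retrieved_ids, relevant_ids):
    """Precision: share of retrieved chunks that are relevant.
    Recall: share of relevant chunks that were retrieved.
    """
    retrieved, relevant = set(retrieved_ids), set(relevant_ids)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical result for one past support ticket.
retrieved = ["c1", "c2", "c7", "c9"]   # what the bot pulled from the knowledge base
relevant = ["c1", "c2", "c3"]          # what a human marked as relevant

precision, recall = precision_recall(retrieved, relevant)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here two of the four retrieved chunks are relevant (precision 0.50) and two of the three relevant chunks were found (recall 0.67). Averaging these numbers over a batch of past tickets gives a baseline to compare against after each knowledge base change.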

PRACTICAL IMPLICATIONS

The shift to custom chatbots creates immediate value across three distinct pillars: Business Operations, Professional Productivity, and Individual Learning.

For Business Operations:

The primary implication is the “Deflection of Routine Labor.” A well trained bot can resolve up to 70 percent of initial customer inquiries without human intervention. This changes the role of the support team from “answering basics” to “managing complex escalations.” Furthermore, bots act as an around the clock lead generation engine. They can qualify a potential customer at 3 AM by asking specific budget or requirement questions and then automatically booking a meeting on a salesperson’s calendar.

For Professionals:

The chatbot becomes a “Personal Knowledge Graph.” Instead of spending twenty minutes searching for a specific clause in a contract or a detail in a project brief, a professional can simply ask their private bot. This “Internal Assistant” model reduces cognitive load and prevents the “Search Fatigue” that plagues modern office work. It allows a professional to scale their expertise by “cloning” their knowledge into a bot that their team can query when they are unavailable.

For Individuals:

The practical use case is “Hyper Personalized Education.” An individual can feed a bot a collection of textbooks or lecture notes to create a custom tutor. This bot understands the specific gaps in the user’s knowledge because it has access to the exact materials the user is studying. Unlike a general AI, this “Custom Tutor” won’t bring in outside information that might confuse the student, staying strictly within the provided curriculum.

LIMITATIONS, RISKS, OR COUNTERPOINTS

While the technology has reached a high level of maturity, it is not without significant risks. The first is Data Staleness. If your company updates its pricing on Monday but forgets to update the bot’s knowledge base, the bot will confidently provide the old price on Tuesday. This requires a “Knowledge Lifecycle” management plan.
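
One simple piece of such a plan is a scheduled staleness check. The sketch below assumes each document carries a last updated date in its metadata; the 90 day threshold and the sample documents are arbitrary illustrations, not recommendations.

```python
from datetime import date, timedelta


def find_stale(documents, today, max_age_days=90):
    """Return titles of documents not updated within max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [d["title"] for d in documents if d["last_updated"] < cutoff]


# Hypothetical knowledge base inventory.
docs = [
    {"title": "Pricing Sheet", "last_updated": date(2025, 1, 10)},
    {"title": "Refund Policy", "last_updated": date(2025, 5, 20)},
]

stale = find_stale(docs, today=date(2025, 6, 1))
print("Needs review:", stale)
```

A check like this only flags age. It cannot tell that a price changed yesterday, so the owner of each document still needs to trigger an update when the source changes.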

Another limitation is the Cost of Context. While No Code tools make building easy, they often charge per message or per token. If a bot becomes extremely popular, the monthly bill can scale rapidly. Additionally, there is the Hallucination Risk. No matter how well you prompt a model, there is a statistical probability that it will provide plausible but false information if the data is ambiguous.
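
To see how per token pricing scales, a back of the envelope estimate helps. Every number below is a placeholder chosen for easy arithmetic, not a real vendor price; substitute the figures from your own plan.

```python
def monthly_cost(messages_per_day, tokens_per_message, price_per_1k_tokens, days=30):
    """Estimate monthly spend under simple per-token pricing."""
    total_tokens = messages_per_day * tokens_per_message * days
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical inputs: 1,500 tokens per message (question, retrieved
# context, and answer combined) at 0.002 currency units per 1,000 tokens.
small = monthly_cost(messages_per_day=500, tokens_per_message=1500, price_per_1k_tokens=0.002)
large = monthly_cost(messages_per_day=5000, tokens_per_message=1500, price_per_1k_tokens=0.002)

print(round(small, 2), round(large, 2))
```

Under these assumed numbers, a tenfold rise in traffic turns a bill of about 45 into about 450. Retrieved context is usually the largest part of each message, so smaller chunks and fewer retrieved passages tend to be the main cost lever.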

Finally, Regulatory Compliance remains a hurdle. If you operate in the European Union or serve users there, your bot must comply with GDPR. Among other obligations, this means you must have a way for users to request that their chat data be deleted. Many basic tools are still catching up to these legal requirements.

FORWARD LOOKING PERSPECTIVE

The next two years will see the rise of Agentic Workflows. We are moving away from bots that just “talk” to bots that “do.” This involves the use of the Model Context Protocol, an open standard that gives AI applications a uniform way to connect to external tools and data sources. For example, your customer support bot will be able to query your inventory database directly to offer an estimated restock date.

We are also moving toward Multimodal Interaction. In 2026, a user will be able to take a photo of a broken part and upload it to the chatbot. The bot will recognize the part, find the manual, and provide a video tutorial on how to fix it. This “Visual Support” will be a game changer for manufacturing.

Lastly, the trend toward On Device Intelligence will ease many privacy concerns. Small Language Models will allow companies to run their chatbots locally, on user devices or on their own servers, meaning sensitive data never has to travel to a third party cloud. This will make custom AI assistants viable for highly regulated sectors like defense and healthcare.

KEY TAKEAWAYS

- Purpose over Platform: Always define exactly what the bot needs to do before selecting a tool.

- Clean Data is Mandatory: The quality of the chunks you upload dictates the quality of the answers.

- Use RAG for Accuracy: Retrieval Augmented Generation is the standard approach in 2025 for reducing hallucinations, though it does not eliminate them.

- Red Team Your Bot: Attempt to break your assistant before your customers do to ensure safety.

- Monitor and Iterate: A chatbot is a living project that requires regular knowledge updates to stay relevant.

- Citations Build Trust: Ensure your bot always provides a source for its claims to maintain authority.

EDITORIAL CONCLUSION

Building your first AI chatbot is no longer a technical hurdle but a strategic opportunity. As we have explored, the transition from “General AI” to “Specialized Agent” is the defining shift of 2025. By following a structured path of data preparation and careful prompting, you can create a tool that serves as a tireless, expert extension of your business or personal brand.

Ultimately, the goal of these systems is not to replace human conversation but to enhance it. By automating the retrieval of facts and the answering of routine questions, we allow humans to return to the high value tasks of empathy, complex problem solving, and creative strategy. The future belongs to those who can effectively “delegate” the repetitive to the machine, and there is no better place to start than by building your first custom assistant today.